Artificial Intelligence and IT Security

The department Cognitive Security Technologies conducts research at the intersection of artificial intelligence (AI) and IT security.

The focus is on two aspects:

Applying AI methods in IT security

State-of-the-art IT systems are characterized by fast-growing complexity. The development of current and future information and communication technology introduces new, previously unforeseen challenges. These range from the increasing connectivity of even the smallest communicating units and their merging into the Internet of Things, through the global connection of critical infrastructures to unsecured communication networks, to the protection of digital identities. In all of these areas, people face the challenge of ensuring the security and stability of the systems involved.

To keep pace with this development, IT security needs to advance and rethink automation. The research department Cognitive Security Technologies develops semi-automated security solutions that use AI to support humans in investigating and securing critical systems.

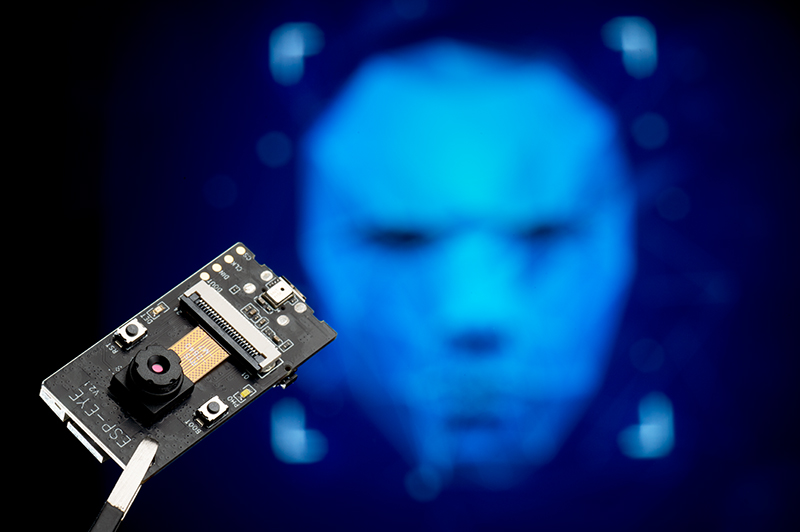

Security of machine learning and AI algorithms

Just like conventional IT systems, AI systems can be attacked. For example, adversarial examples can be used to manipulate AI-based facial recognition. Attackers can thus gain unauthorized access to sensitive access management systems that rely on AI. Similar attack scenarios affect other fields such as autonomous driving, where humans must rely on the robustness and stability of assistance systems.
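To illustrate how an adversarial example works in principle, the following is a minimal sketch of the fast gradient sign method (FGSM) applied to a toy logistic classifier. It is not the department's method or tooling. The weights, the input, and the function names `predict` and `fgsm_perturb` are hypothetical and chosen only for illustration. Real attacks on facial recognition target far larger models, but the idea is the same: a small, bounded change to the input flips the decision.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Probability that input x belongs to class 1 under a logistic model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def fgsm_perturb(weights, bias, x, true_label, epsilon):
    """Shift each feature by +/- epsilon in the direction that increases the loss.

    For a logistic model with cross-entropy loss, the gradient of the loss
    with respect to the input is (p - y) * w.
    """
    p = predict(weights, bias, x)
    grad = [(p - true_label) * w for w in weights]
    sign = lambda g: (g > 0) - (g < 0)
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical toy model and input (assumptions for illustration only).
weights = [2.0, -1.0, 0.5]
bias = 0.0
x = [0.3, 0.1, 0.2]

x_adv = fgsm_perturb(weights, bias, x, true_label=1, epsilon=0.2)

print("original score:   ", predict(weights, bias, x))
print("adversarial score:", predict(weights, bias, x_adv))
```

In this toy setting, the original input is scored above 0.5 (class 1), while the perturbed input, which differs by at most 0.2 per feature, is scored below 0.5.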

One field of research of the department Cognitive Security Technologies at Fraunhofer AISEC is the exploration of such vulnerabilities in AI algorithms and of solutions to fix them. Furthermore, the department offers tests to harden such AI systems.

Fraunhofer Institute for Applied and Integrated Security
